Computer Languages


A programming language is a kind of formal language. An algorithm can also be viewed as a kind of language.

Programming Languages

Each programming language has its own alphabet. Its symbols can be single letters like “a” or multi-character symbols like “if” and “+”.

\( \Sigma_{\text{Python3}} = \left\{ a, b, c, \ldots, \text{if}, \text{while}, \ldots, +, \ldots \right\} \)

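We also have grammar rules. It would be wrong to say x = 7 + in Python, because the + is missing its right-hand operand. By contrast, the odd-looking x=x---+-7 is accepted: it parses as x minus a chain of unary signs applied to 7.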

If the code you write in a programming language can be compiled or interpreted, then we would say it is accepted by the language. It might not do what you want, but you communicated successfully and the computer understood you. If your code gets a syntax error, then it is rejected by the language.
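As a minimal sketch of this accept/reject idea, we can ask Python itself whether a piece of text belongs to its language. The helper name `is_accepted` is made up for this example. It relies on the built-in `compile()`, which parses source text without running it and raises `SyntaxError` when the text breaks the grammar.

```python
def is_accepted(source: str) -> bool:
    """Return True if Python can parse `source`, False on a syntax error."""
    try:
        # compile() only parses and compiles the text; it does not run it.
        compile(source, "<example>", "exec")
    except SyntaxError:
        return False
    return True


print(is_accepted("x = x + 7"))  # well-formed statement
print(is_accepted("x = 7 +"))    # the + has no right-hand operand
```

Note that the first string is accepted even if `x` was never defined. Acceptance here only means the text follows the grammar, not that the program does what you want when it runs.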

If you want to learn more about Programming Language Theory, a place to start is here.

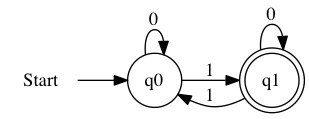

Problem Languages

An algorithm can also be thought of as a kind of language. The algorithm takes some kind of string as input. We have already seen that everything is really binary. Whether your algorithm runs on text, pictures, or anything else, at root it is being given binary data. We can imagine this data as a string.
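To make "data as a string" concrete, here is a small sketch that turns a piece of text into a string of 0s and 1s. The function name `to_bit_string` and the choice of UTF-8 encoding are assumptions made for this example. Any other kind of data (a picture, a sound file) could be handled the same way once it is read in as bytes.

```python
def to_bit_string(data: bytes) -> str:
    """Write each byte as 8 binary digits and join them into one string."""
    return "".join(format(byte, "08b") for byte in data)


text = "if"
bits = to_bit_string(text.encode("utf-8"))
print(bits)          # 0110100101100110
print(int(bits, 2))  # 26982, the same data read as one number
```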

Once the algorithm has its input, it runs. One of two things must happen. It either succeeds in its task or it fails. If the algorithm succeeds in its task, we say the input is accepted. If the algorithm fails, we say the input is rejected.

Everything we said about programming languages is just a specific version of this idea. A compiler is a program. It takes a source code file (really a big number in binary) as input. If the source code file is part of the language, the compiler builds a program. If the source code file is not part of the language, the compiler rejects it and displays an error.

We can expand this to any algorithm. Imagine we have an algorithm exp(a,b) for computing a raised to the power b. There is a set of inputs where the algorithm is successful. We could also provide inputs that cause the program to crash or overflow. The input would be rejected in this case.
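One way to picture this is the sketch below. It assumes `exp(a, b)` is implemented with floating-point arithmetic through Python's `math.pow`, which is only one possible choice. The wrapper `accepts` is a made-up name: it runs the algorithm and reports whether it succeeded.

```python
import math


def exp(a: float, b: float) -> float:
    """Compute a raised to the power b using floating-point arithmetic."""
    return math.pow(a, b)


def accepts(a: float, b: float) -> bool:
    """Return True if exp(a, b) succeeds, False if it overflows or crashes."""
    try:
        exp(a, b)
    except (OverflowError, ValueError):
        # OverflowError: the result is too large for a float.
        # ValueError: the result is not a real number.
        return False
    return True


print(accepts(2, 10))    # 1024.0 fits comfortably
print(accepts(10, 400))  # too large for a float, so rejected
print(accepts(-8, 0.5))  # no real square root of -8, so rejected
```

The language of this algorithm is the set of pairs (a, b) for which `accepts` returns True.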

A problem is a language. A solution to the problem is an input that solves the problem. An invalid solution is an input that is rejected, because it does not solve the problem. Studying the kinds of languages computers can recognize is an entire field called the Theory of Computation.
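As a hedged illustration, take a problem that is not from the text above: "find a nontrivial factor of 91". The sketch below treats each candidate answer as a string and accepts exactly those strings that solve the problem. The function name `accepts` and the choice of problem are assumptions made for this example.

```python
def accepts(candidate: str, n: int = 91) -> bool:
    """Accept `candidate` if it is a decimal numeral for a nontrivial factor of n."""
    if not (candidate.isascii() and candidate.isdigit()):
        return False  # not even a number, so it cannot be a solution
    d = int(candidate)
    return 1 < d < n and n % d == 0


# The language of this problem: every accepted string among "0" to "99".
language = [s for s in map(str, range(100)) if accepts(s)]
print(language)  # ['7', '13']
```

Strings like "5" or "hello" are rejected because they do not solve the problem, which is exactly what it means for them to be outside the language.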